Architecture 1: Varnish behind Amazon Route 53

In the above architecture, we have placed the Varnish page cache behind the Amazon Route 53 DNS. When a user's lookup for www.yourApp.com reaches Route 53, it applies a Round Robin algorithm and returns the public IP addresses of the Varnish EC2 instances. Each Varnish EC2 instance is an m1.large instance type (64-bit, 2 cores, 7.5 GB RAM, guaranteed IO) with an Amazon Elastic IP attached. Varnish may work on a 32-bit OS, but it is highly recommended to run it on a 64-bit OS with enough memory. In our use case we chose the m1.large instance type for its memory and guaranteed IO. You can choose a larger-memory instance type in AWS depending on your memory requirements.

Web apps such as online news, classifieds, and social sites depend heavily on page caching for web acceleration. In such use cases it is better to run Varnish in High Availability mode, so that losing a single cache does not cause a cache stampede on the back end.

Since Varnish is a critical component of this web app architecture, it is deployed in High Availability mode across 2 different Availability Zones inside a single Amazon region. Even if a single Varnish EC2 instance fails, or an entire AWS AZ fails, your site remains functional. However, the above architecture has only partial HA built in. Let us see why: suppose User-1 is served by Varnish-A and User-2 is served by Varnish-B. If Varnish-A goes down, User-1's browser will still try the Varnish-A IP, and that request will fail with an error page. Because DNS Round Robin is configured at Route 53, the IP of the secondary Varnish-B is also returned to User-1's browser, so the browser can then reach the website through Varnish-B. This is not a fully HA architecture because User-1 can see a server error page at times.
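The partial failover described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular browser implements it: the IP addresses are hypothetical documentation addresses, and `can_connect` is a stand-in we introduce for a real TCP connection attempt.

```python
from typing import Callable, Iterable, Optional


def first_reachable(ips: Iterable[str],
                    can_connect: Callable[[str], bool]) -> Optional[str]:
    """Return the first IP that accepts a connection, trying them in DNS order."""
    for ip in ips:
        if can_connect(ip):
            return ip
    return None  # every record failed: the user sees an error page


# Hypothetical Elastic IPs for Varnish-A and Varnish-B.
records = ["203.0.113.10", "203.0.113.20"]
down = {"203.0.113.10"}  # assume Varnish-A has failed

chosen = first_reachable(records, lambda ip: ip not in down)
print(chosen)  # 203.0.113.20
```

The failed first attempt is where User-1 experiences the delay or error, which is why this setup counts as only partially HA.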

Each Varnish EC2 server is configured with a Round Robin director, so that non-cached HTTP requests are delivered to the back-end web/app servers. At the time of writing, Varnish can be configured with only Round Robin or Random directors; Weighted or Least Connection directors would be a useful addition in future. Health checks are also configured on the Varnish EC2 servers so that HTTP load is directed only to healthy back-end web servers.
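To make the behaviour concrete, here is a minimal Python model of a round-robin director that skips back ends marked unhealthy. It is a sketch of the selection logic only, not Varnish's implementation; the class name and the back-end names are hypothetical.

```python
from itertools import cycle
from typing import Dict, List, Optional


class RoundRobinDirector:
    """Toy model of round-robin selection with health awareness."""

    def __init__(self, backends: List[str]) -> None:
        self.backends = backends
        self.healthy: Dict[str, bool] = {b: True for b in backends}
        self._ring = cycle(backends)

    def mark(self, backend: str, healthy: bool) -> None:
        """Record the result of a health check for one back end."""
        self.healthy[backend] = healthy

    def pick(self) -> Optional[str]:
        # Look at each back end at most once per call, skipping unhealthy ones.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if self.healthy[candidate]:
                return candidate
        return None  # no healthy back end is available


director = RoundRobinDirector(["web-1", "web-2", "web-3"])
director.mark("web-2", False)  # assume web-2 failed its health check
picks = [director.pick() for _ in range(4)]
print(picks)  # ['web-1', 'web-3', 'web-1', 'web-3']
```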

EBS-backed Red Hat EC2 instances are used for the Varnish servers so that Varnish log files are stored persistently on EBS volumes and survive EC2 failures.

Varnish logs on Amazon EBS storage are particularly helpful if you are going to power down your Varnish server but don’t want to start over from scratch with your cache. Varnish can pre-load URLs and warm the cache using the "varnishlog" and "varnishreplay" commands. First, you will need to capture a log file. Use the following command to write one:

varnishlog -D -a -w /var/log/varnish.log

Flags: -D runs varnishlog in daemon mode, -a appends to the log file instead of overwriting it, and -w writes the log to the file name that follows.

Once your server is back up with an empty cache, run the following command to replay the requests recorded in your log file:

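varnishreplay -a localhost:80 -r /var/log/varnish.log

Flags: -r names the log file to replay and -a names the address:port that receives the replayed requests (localhost:80 here is a placeholder for your own Varnish listener). Both are mandatory.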
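If a replayable log is not available, a simpler alternative (not the varnishreplay method above) is to request a known list of URLs through Varnish so that it caches them. The sketch below assumes you keep such a list yourself; `warm_cache`, `http_status`, and the host name in the usage note are hypothetical names introduced for illustration.

```python
from typing import Callable, Dict, Iterable
from urllib.request import urlopen


def http_status(url: str) -> int:
    """Fetch a URL and return its HTTP status (needs network access).

    urlopen raises an exception for 4xx/5xx responses; a real script
    would catch and log those instead of stopping.
    """
    with urlopen(url, timeout=10) as response:
        return response.status


def warm_cache(base_url: str, paths: Iterable[str],
               fetch: Callable[[str], int] = http_status) -> Dict[str, int]:
    """Request every path once through the cache and record the status codes."""
    results: Dict[str, int] = {}
    for path in paths:
        url = base_url.rstrip("/") + "/" + path.lstrip("/")
        results[path] = fetch(url)
    return results
```

A call such as `warm_cache("http://varnish-a.internal", ["/", "/news"])` would issue one request per path. Passing your own `fetch` function makes it easy to dry-run the list without touching the network.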

We use a Munin plug-in in this use case to monitor varnishstat. Munin is a monitoring tool with a plug-in framework, and a plug-in is available for the Varnish page cache. Munin nodes periodically report data back to a Munin server, which collects the data and generates an HTML page with graphs.

No backup is configured for the Varnish EC2 instances because Varnish is not a persistent cache.

EBS snapshots are taken only when the Varnish configuration changes.

Architecture 2: Varnish behind the Amazon Elastic Load Balancer

In the above architecture, we have placed the Varnish page cache behind the Amazon ELB. When a user's lookup for www.yourApp.com reaches Amazon Route 53, it resolves to the Amazon Elastic Load Balancer, which receives the request. The ELB uses a Round Robin algorithm to send requests to the Varnish caches. Each Varnish EC2 instance is an m1.large instance type (64-bit, 2 cores, 7.5 GB RAM, guaranteed IO) registered with the ELB. An Elastic IP is not needed for the Varnish instances because the ELB can reach them on their private IPs. As in the previous architecture, EBS-backed Red Hat EC2 instances are used for the Varnish servers so that Varnish log files are stored persistently on EBS volumes and the cache can be pre-loaded or warmed quickly after EC2 failures.

Varnish runs in HA mode across 2 Availability Zones inside a region. The ELB is configured with health checks so that requests go only to healthy Varnish EC2 instances. Both Varnish instances hold almost the same page cache entries; this is not a distributed setup. Varnish is not set up in Auto Scaling mode. The advantage of this setup is that if a Varnish EC2 instance fails, requests are redirected to the other Varnish server seamlessly. If an AZ fails, the ELB transfers requests to the Varnish instance deployed in the other AZ.

This setup has some negatives. Placing the Amazon ELB in front of Varnish increases response latency by less than 150 ms, a delay that might not be acceptable in some use cases. All the Varnish EC2 instances under the ELB need to be cache warmed properly and frequently, or else a cold cache will lead to a cache stampede on the web/app servers. A distributed Varnish cache cannot be set up under the ELB, because the ELB cannot route HTTP requests to different Varnish instances based on the URL.
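The effect of cache warming on back-end load can be seen with a toy model. The Python sketch below counts how many requests fall through to the back end for a warmed and an unwarmed cache. It models only sequential misses, whereas a real stampede involves many concurrent requests hitting a cold cache; the class, the `render` function, and the traffic sample are all invented for illustration.

```python
from typing import Callable, Dict


class PageCache:
    """Minimal page cache that counts requests passed to the back end."""

    def __init__(self, backend: Callable[[str], str]) -> None:
        self.backend = backend
        self.store: Dict[str, str] = {}
        self.backend_hits = 0

    def get(self, url: str) -> str:
        if url not in self.store:
            # Cache miss: the web/app server has to render the page.
            self.backend_hits += 1
            self.store[url] = self.backend(url)
        return self.store[url]


def render(url: str) -> str:
    """Stand-in for an expensive page render on the web/app server."""
    return f"<html>{url}</html>"


traffic = ["/", "/news", "/", "/sports", "/news", "/"]

warm = PageCache(render)
for url in set(traffic):   # warm the cache before taking traffic
    warm.get(url)
warm.backend_hits = 0      # count only the hits caused by live traffic
for url in traffic:
    warm.get(url)

cold = PageCache(render)
for url in traffic:
    cold.get(url)

print(warm.backend_hits, cold.backend_hits)  # 0 3
```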

As in the previous architecture, the Varnish EC2 servers are configured with a Round Robin director in this setup, so that non-cached HTTP requests are delivered to the back-end web/app servers (Varnish can currently be configured with only Round Robin or Random directors).

A Munin plug-in is configured in this use case to monitor varnishstat. No backup is configured for the Varnish EC2 instances because Varnish is not a persistent cache.

EBS snapshots are taken only when the Varnish configuration changes.

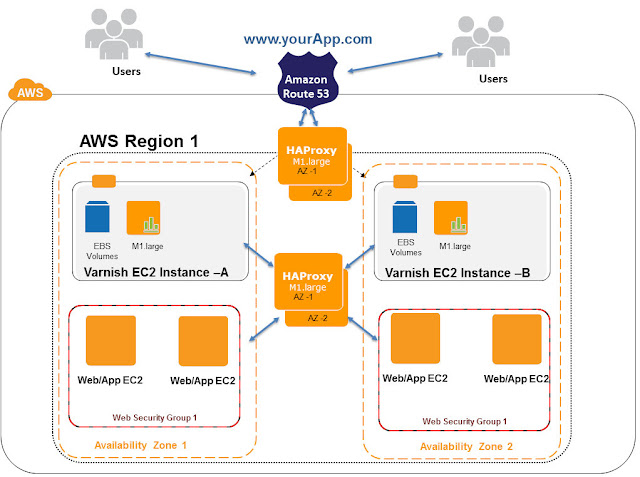

Architecture 3: Varnish behind a Reverse Proxy (HAProxy or Nginx)

In the above architecture, we have placed the Varnish page cache behind a reverse proxy, the HAProxy load balancer. When a user's lookup for www.yourApp.com reaches Amazon Route 53, it resolves to the HAProxy instances, which receive the request. Each HAProxy runs on a Red Hat EC2 m1.large instance. HAProxy uses a Round Robin algorithm to send requests to the Varnish caches. Each Varnish EC2 instance is an m1.large instance type (64-bit, 2 cores, 7.5 GB RAM, guaranteed IO) registered as an HAProxy back end. Either an Elastic IP or a private IP can be used to attach a Varnish EC2 instance to HAProxy; in this use case we configured HAProxy with the Varnish private IPs. As in the previous architecture, EBS-backed Red Hat EC2 instances are used for the Varnish servers so that Varnish log files are stored persistently on EBS volumes and the cache can be pre-loaded or warmed quickly after EC2 failures.

Varnish runs in HA mode across 2 Availability Zones inside a region. The HAProxies are configured with health checks so that requests go only to healthy Varnish EC2 instances. In this architecture the Varnish EC2 instances can also be distributed using the HAProxies. For example, www.yourApp.com/appcomp1/* can be directed to Varnish-A and www.yourApp.com/appcomp2/* can be directed to Varnish-B, so the two Varnish instances can hold different cache entries. Both Varnish cache distribution and HA can be achieved in this architecture using HAProxy.
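The URL-based distribution above can be modelled as a prefix lookup with a health-aware fallback. This is a Python sketch of the routing decision only, not HAProxy configuration; the node names are hypothetical, and falling back to any healthy node is an assumption we make to combine distribution with HA.

```python
from typing import List, Optional, Set, Tuple

# Path prefix -> preferred Varnish node, mirroring the example above.
ROUTES: List[Tuple[str, str]] = [
    ("/appcomp1/", "varnish-a"),
    ("/appcomp2/", "varnish-b"),
]
# Order in which nodes are tried when no route matches or the
# preferred node is down (an assumption for this sketch).
DEFAULT_POOL = ["varnish-a", "varnish-b"]


def route(path: str, healthy: Set[str]) -> Optional[str]:
    """Pick the Varnish node for a request path, honouring health checks."""
    preferred = next(
        (node for prefix, node in ROUTES if path.startswith(prefix)), None
    )
    if preferred in healthy:
        return preferred
    for node in DEFAULT_POOL:
        if node in healthy:
            return node
    return None  # no healthy Varnish node is left


both = {"varnish-a", "varnish-b"}
print(route("/appcomp1/index.html", both))           # varnish-a
print(route("/appcomp2/list", both))                 # varnish-b
print(route("/appcomp1/index.html", {"varnish-b"}))  # varnish-b
```

Note that after a fallback the surviving node starts with no entries for the other node's URLs, so the cache warming concern still applies.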

The Varnish cache can be set up in Auto Scaling mode, but unless the use case demands it this is usually not recommended, because newly launched instances start with a cold cache and bring back the cache warming and stampede problems.

The advantage of this setup is that if a Varnish EC2 instance fails, requests are redirected to another Varnish server seamlessly. If an AZ fails, HAProxy transfers the requests to the Varnish instance deployed in the other AZ. Varnish cache distribution and HA can also be achieved together in this architecture. The setup has some negatives as well. Placing HAProxy in front of Varnish increases response latency by less than 100 ms, a delay that might not be acceptable in some use cases. All the Varnish EC2 instances under HAProxy need to be cache warmed properly and frequently, or else a cold cache will lead to a cache stampede on the web/app servers. Running layers of HAProxies also adds infrastructure and maintenance cost.

The Varnish EC2 servers are configured with a Round Robin director to pass requests to the level-2 HAProxy servers in this setup. The level-2 HAProxy servers can direct load to the web/app layer with a variety of LB algorithms such as Round Robin, Weighted, and Least Connection. Since the number of web/app servers under Varnish is abstracted by HAProxy, we can seamlessly auto scale the web/app servers and hot-reconfigure the HAProxies accordingly. This helps us design highly scalable web application layers. This architecture is recommended for use cases in AWS where elastic web/app layers are needed under Varnish with flexibility in LB algorithms. As in the previous cases, a Munin plug-in is configured to monitor varnishstat. No backup is configured for the Varnish EC2 instances because Varnish is not a persistent cache. EBS snapshots are taken only when the Varnish configuration changes.
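To show how the extra algorithms at level 2 differ from plain round robin, here is a small Python sketch of Least Connection and Weighted selection. Both functions are simplified illustrations (HAProxy's own schedulers are more refined), and the server names, connection counts, and weights are made up.

```python
from typing import Dict, List


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections (ties broken by name)."""
    return min(active, key=lambda server: (active[server], server))


def weighted_round_robin(weights: Dict[str, int]) -> List[str]:
    """Return one full rotation in which each server appears `weight` times."""
    schedule: List[str] = []
    remaining = dict(weights)
    while any(count > 0 for count in remaining.values()):
        for server in weights:
            if remaining[server] > 0:
                schedule.append(server)
                remaining[server] -= 1
    return schedule


print(least_connections({"app-1": 4, "app-2": 1, "app-3": 1}))  # app-2
print(weighted_round_robin({"app-1": 2, "app-2": 1}))
# ['app-1', 'app-2', 'app-1']
```

When the web/app layer auto scales, only the input dictionaries change, which is the abstraction the level-2 HAProxy layer provides to Varnish.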


8 comments:

ELB can detect Instance Health.

ELB's also support autoscaling.

Great Article! Really Useful!

In the last method, the HAProxy is also running on an EC2 instance, right? So it has to be in some AZ in the region. What if that AZ fails? In Singapore there are 2 AZs, and if you deploy your HAProxy in AZ1 then you are protected against failure in AZ2 only. If AZ1 goes down there's not much you can do.

Hi Anand,

There are 2 HAProxies deployed for high availability across AZ failures. Even if one HAProxy fails because of an AZ failure, the other HAProxy deployed in the alternate AZ will keep the site active.

harish

Hi Harish,

Thanks for the reply.

I agree with your argument, but since Route 53 does not have health check, it can still direct some users to an HAProxy that is in an AZ that has gone down, right? These users will continue to face down time.

Anand

My bad. It seems Route 53 indeed does health checks if configured to do so.

Thanks for the informative article.
